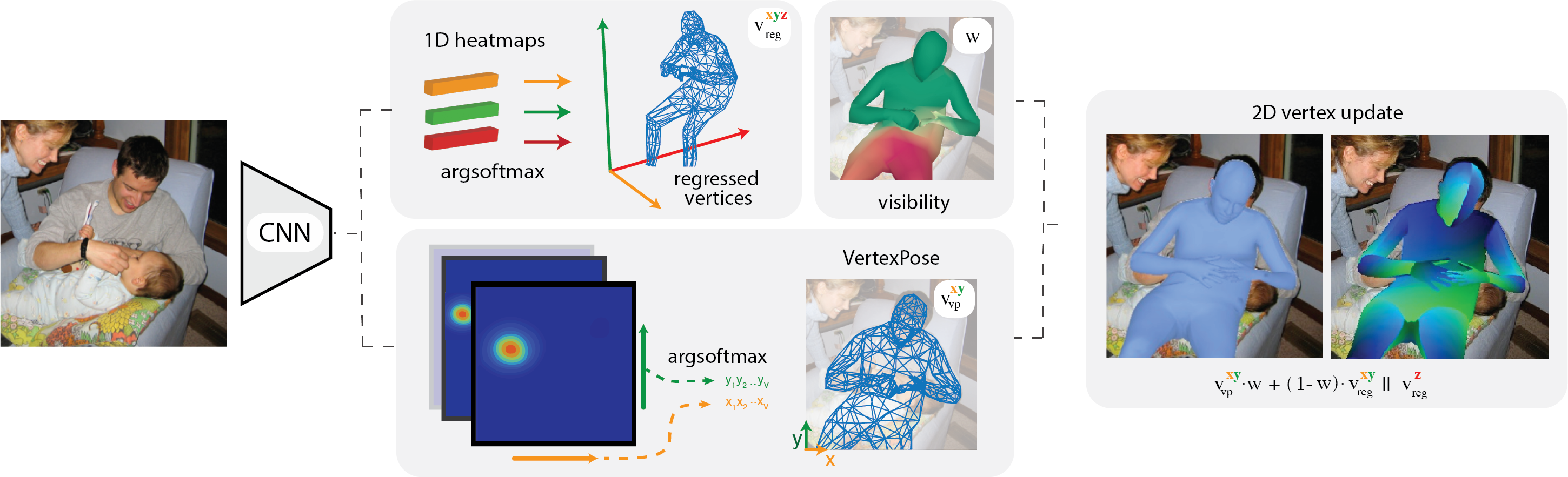

MeshPose Architecture

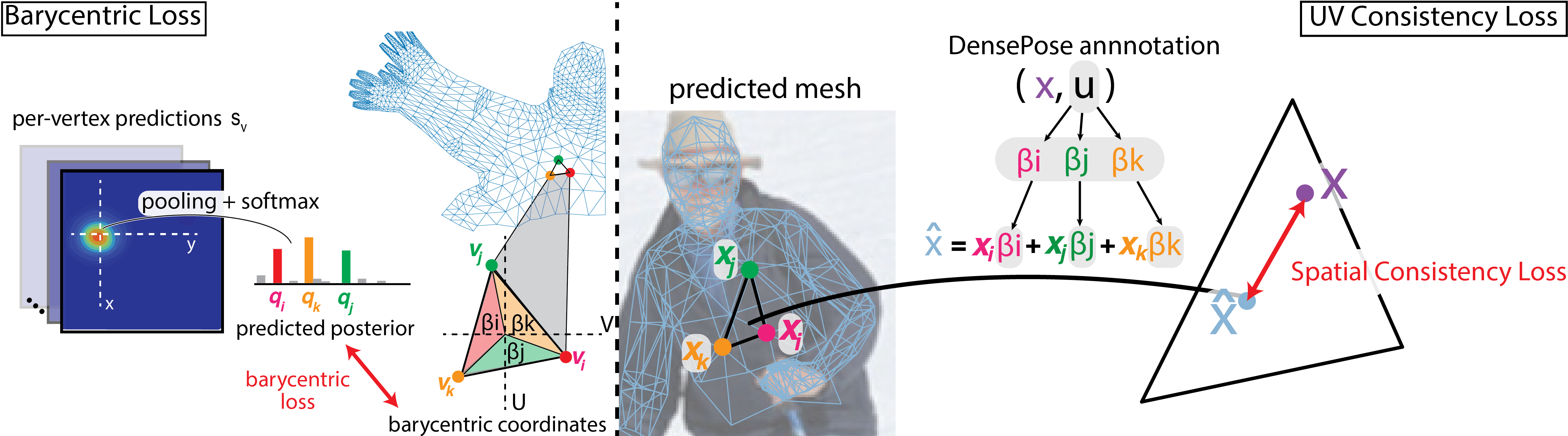

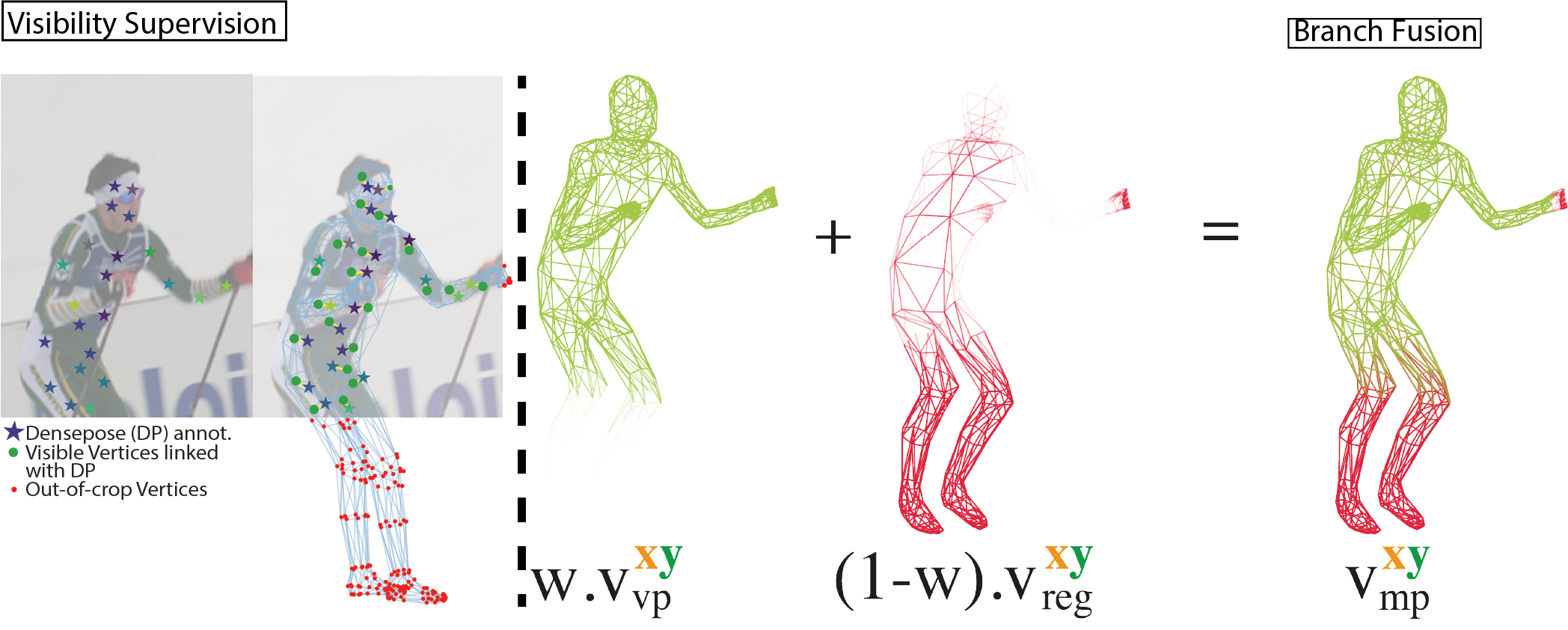

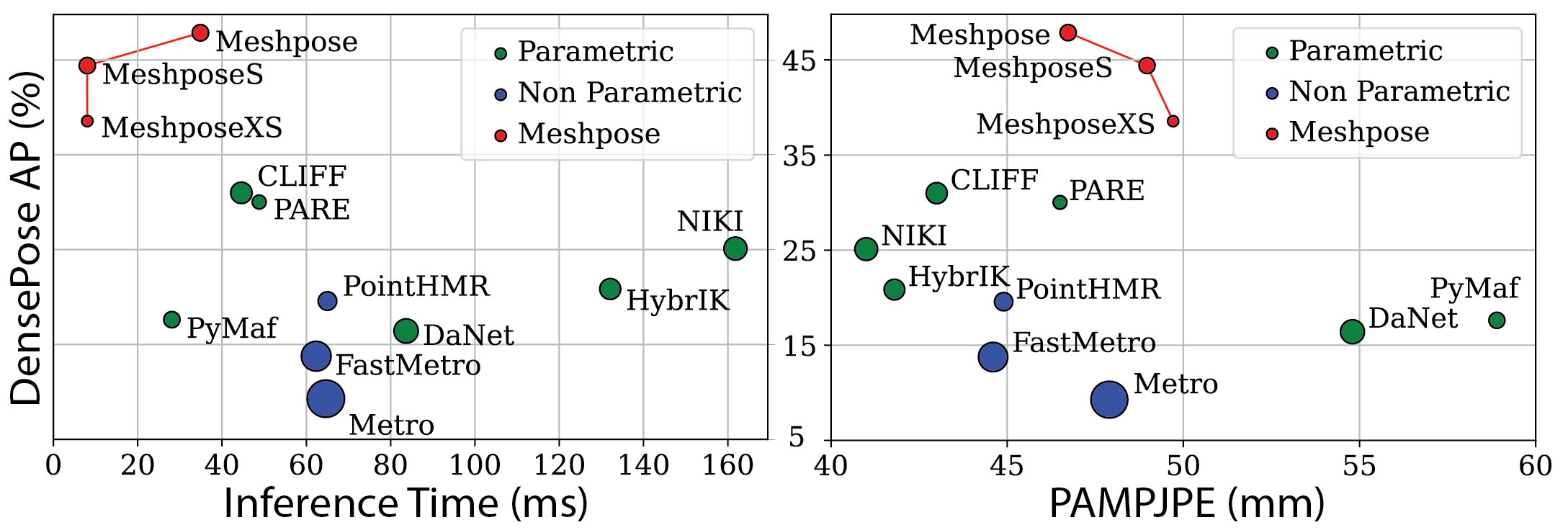

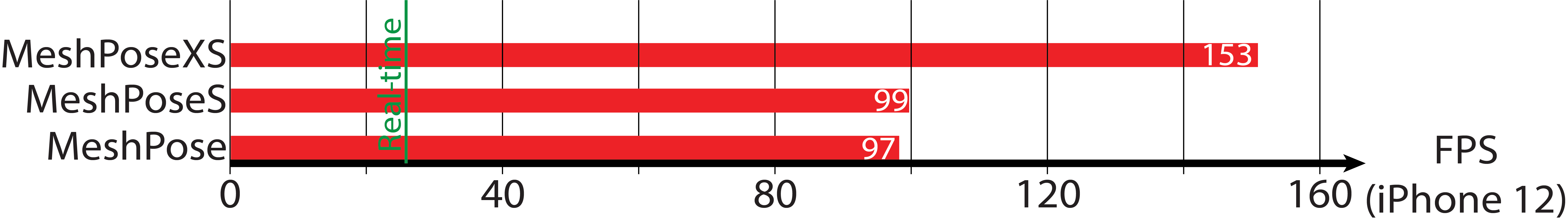

The lower VertexPose branch extracts multiple heatmaps. It applies the spatial argsoftmax operation to them to compute precise x and y coordinates for all vertices inside the input crop. The upper Regression branch computes the coordinates (x, y, and vertex depth z) for all vertices, along with their visibility scores w. The score w takes lower values when the corresponding vertex is either occluded or falls outside the crop area. We differentiably combine the VertexPose and regressed coordinates via w to obtain the final 3D mesh. We densely supervise the intermediate per-vertex heatmaps and the final output with UV, mesh, and silhouette cues. The result is a low-latency, image-aligned, in-the-wild human mesh recovery (HMR) system.
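The two operations in the caption can be illustrated with a small NumPy sketch. The first is spatial argsoftmax over per-vertex heatmaps. The second is the w-weighted fusion with the regressed coordinates. This is not the MeshPose implementation.

Several details are illustrative assumptions: the function names `spatial_argsoftmax` and `fuse_vertices`, the temperature `beta`, and w lying in [0, 1]. The fusion rule is also an assumption. Here x and y are blended linearly as `w * VertexPose + (1 - w) * Regression`, and z is taken from the Regression branch. The caption only says the combination is differentiable and driven by w.

```python
import numpy as np


def spatial_argsoftmax(heatmaps, beta=1.0):
    """Soft-argmax over each heatmap.

    heatmaps: array of shape (V, H, W), one heatmap per vertex.
    beta: hypothetical temperature; larger values sharpen the softmax.
    Returns an array of shape (V, 2) holding expected (x, y) pixel coordinates.
    """
    v, h, w = heatmaps.shape
    flat = beta * heatmaps.reshape(v, -1)
    flat = flat - flat.max(axis=1, keepdims=True)  # subtract max for numerical stability
    probs = np.exp(flat)
    probs /= probs.sum(axis=1, keepdims=True)      # softmax over all H*W pixels
    probs = probs.reshape(v, h, w)

    xs = np.arange(w, dtype=float)                 # column index = x coordinate
    ys = np.arange(h, dtype=float)                 # row index = y coordinate
    x = (probs.sum(axis=1) * xs).sum(axis=1)       # expectation over the x-marginal
    y = (probs.sum(axis=2) * ys).sum(axis=1)       # expectation over the y-marginal
    return np.stack([x, y], axis=1)


def fuse_vertices(vp_xy, reg_xyz, w):
    """Assumed fusion rule: blend x, y by visibility w, keep regressed z.

    vp_xy:   (V, 2) coordinates from the VertexPose branch.
    reg_xyz: (V, 3) coordinates (x, y, z) from the Regression branch.
    w:       (V,) visibility scores, assumed to lie in [0, 1].
    """
    w = w[:, None]
    xy = w * vp_xy + (1.0 - w) * reg_xyz[:, :2]    # visible -> trust heatmaps
    return np.concatenate([xy, reg_xyz[:, 2:3]], axis=1)


# Usage: a sharp peak at (x=3, y=1) yields coordinates close to (3, 1).
hm = np.zeros((1, 4, 5))
hm[0, 1, 3] = 50.0
print(spatial_argsoftmax(hm))
```

Every step is smooth, so the same computation written in an autodiff framework stays differentiable. This is what allows the heatmaps and w to be trained end to end through the fused output. A low w (occluded or out-of-crop vertex) shifts x and y toward the Regression branch. That branch can still predict vertices the heatmaps cannot localize.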